The Israeli military relies on AI to search for Hamas fighters. The system operates almost autonomously, has flagged tens of thousands of people as suspects, and its use comes with accepted civilian casualties.

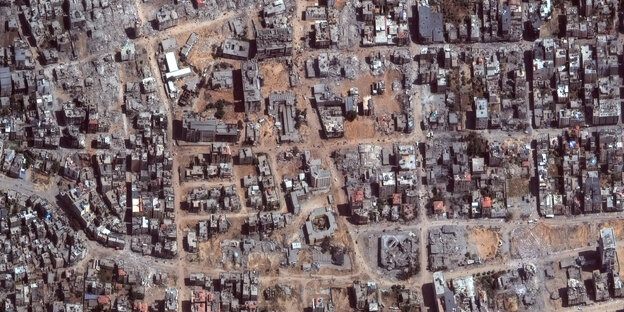

Total destruction and civilian casualties are accepted. Photo: Maxar Technologies/Reuters

A recent investigation illustrates the dystopia of the war in the Gaza Strip. According to the Israeli-Palestinian online outlet +972 Magazine, the Israeli military uses an artificial intelligence program called “Lavender” to identify Hamas targets. In total, the AI labeled at least 37,000 people as “terrorists” and marked them for killing.

According to whistleblowers, civilian casualties are knowingly accepted. For a single low-ranking Hamas fighter, 15 to 20 civilian deaths were considered tolerable; for high-ranking commanders, even the deaths of more than 100 civilians were accepted.

Equally shocking is that residential buildings where families live are deliberately bombed because, according to the military, targets are easier to locate when they are at home with their families.

“Lavender” operates almost completely autonomously. One source reports spending about 20 seconds verifying each person the AI classifies as a suspect. During that time, the source simply checks whether the target is a man.

Other striking findings recall earlier conflicts, such as the American “War on Terror” in Afghanistan and elsewhere. In 2012 it became known that, in the context of drone strikes, Washington considered any “military-age male” to be an “enemy combatant” per se, unless proven otherwise. “Lavender”, too, does not stop short of Palestinian minors.

In fact, mass killings using AI are a direct result of the “push-button killing” established in the wake of the counterterrorism wars of the past two decades. Any understanding of ethics, morality and the rule of law is lost in this type of war.

Furthermore, this kind of warfare is unreliable and unsuccessful, and it creates new terrorists. The disastrous outcome of the war in Afghanistan makes this clear: many of the Taliban leaders who were declared dead in recent years after supposedly precise drone strikes now rule in Kabul and are stronger than ever.